Implementing fast TCP fingerprinting with eBPF - part 2

This is the second part of my series on implementing fast TCP fingerprinting in a golang webserver, using eBPF.

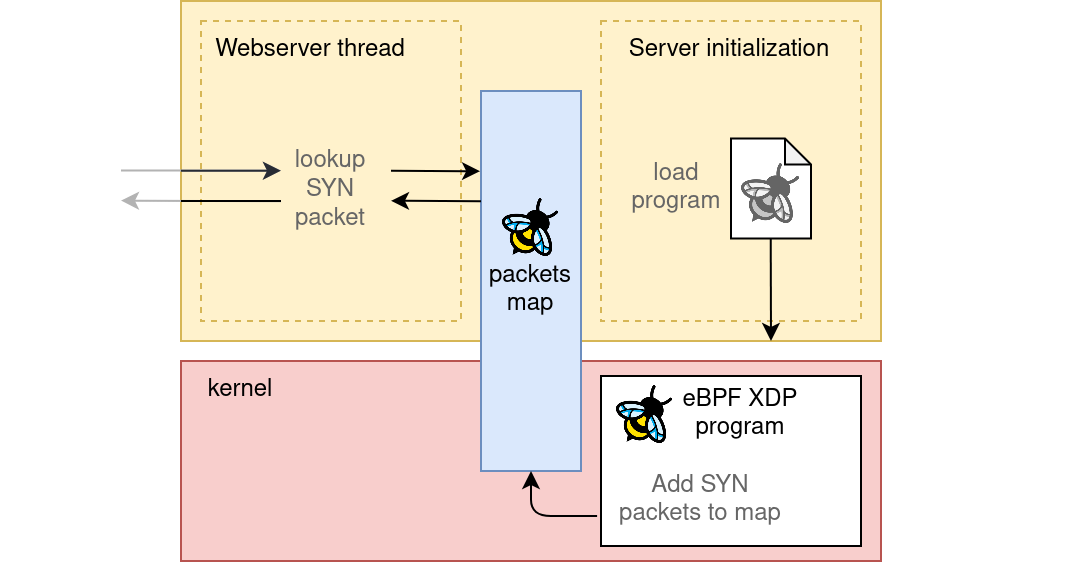

In the previous article I focused on the theory behind TCP fingerprinting, introduced the core concepts behind eBPF, and laid out the base architecture we are going to follow.

This article is going to focus on the actual development.

The goal

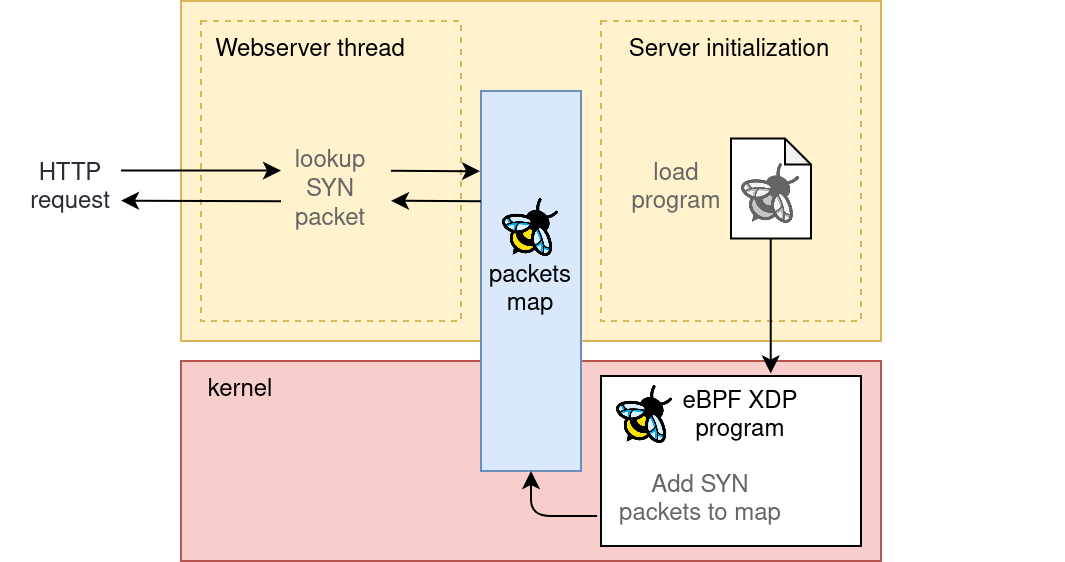

Just to recap the important points, our goal is to write a web server that echoes back to the visitor information taken from their TCP handshake.

We are going to use the cilium eBPF library, which provides an easy way to interact with an eBPF program directly from Go, without dependencies on C or libbpf. We can take care of all the boilerplate simply by following the cilium ebpf getting started guide, which defines an example eBPF XDP program managed and executed with golang.

The golang side

There isn’t anything particularly interesting in the user space side of the project. The introductory template from the cilium eBPF project already shows the usage of a shared eBPF map. The map is defined on the C side like this:

// ebpf.c

// ...

struct {

__uint(type, BPF_MAP_TYPE_ARRAY);

__type(key, __u32);

__type(value, __u64);

__uint(max_entries, 1);

} pkt_count SEC(".maps");

And thanks to the autogenerated boilerplate code it can be easily queried from the golang side:

var count uint64

err := objs.PktCount.Lookup(uint32(0), &count)

Note that the eBPF system offers several map types. This example defines an array, but we can easily change it into a BPF_MAP_TYPE_HASH, which is what we actually need for our project.

We can then write a regular golang webserver, and query that hashmap using the ip+port of the client when we receive a connection.

The web framework provided by the standard library does not put the remote address of the connection in the request context by default (it only exposes it as the r.RemoteAddr string), so we’ll manually add it to the request context:

// ...

type remoteAddrKeyType struct{}

var remoteAddrKey = remoteAddrKeyType{}

httpServer := &http.Server{

Addr: net.JoinHostPort("", "8080"),

ConnContext: func(ctx context.Context, c net.Conn) context.Context {

// store the address as a "host:port" string, so the handler can split it

return context.WithValue(ctx, remoteAddrKey, c.RemoteAddr().String())

},

}

http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {

remoteAddr, _ := r.Context().Value(remoteAddrKey).(string)

remoteIP, remotePort, _ := net.SplitHostPort(remoteAddr)

tcpData := bpfProbe.Lookup(remoteIP, remotePort)

logger.Printf(

"client IP: %s, client port: %s, TCP data: %s",

remoteIP,

remotePort,

tcpData,

)

})

httpServer.ListenAndServe()
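
The ConnContext wiring can be checked without any eBPF involved. The following is a minimal sketch, assuming a throwaway server from net/http/httptest; the helper names (withRemoteAddr, echoRemoteAddr, fetchOwnAddr) are made up for this example and are not part of the project.

```go
package main

import (
	"context"
	"fmt"
	"io"
	"net"
	"net/http"
	"net/http/httptest"
)

// remoteAddrKeyType is a private context key type, as in the snippet above.
type remoteAddrKeyType struct{}

var remoteAddrKey = remoteAddrKeyType{}

// withRemoteAddr stores the peer address of each accepted connection
// in the context of the requests served on it.
func withRemoteAddr(ctx context.Context, c net.Conn) context.Context {
	return context.WithValue(ctx, remoteAddrKey, c.RemoteAddr().String())
}

// echoRemoteAddr writes back the client IP and port found in the context.
func echoRemoteAddr(w http.ResponseWriter, r *http.Request) {
	addr, ok := r.Context().Value(remoteAddrKey).(string)
	if !ok {
		http.Error(w, "remote address missing", http.StatusInternalServerError)
		return
	}
	ip, port, err := net.SplitHostPort(addr)
	if err != nil {
		http.Error(w, err.Error(), http.StatusInternalServerError)
		return
	}
	fmt.Fprintf(w, "ip=%s port=%s", ip, port)
}

// fetchOwnAddr starts a throwaway server (hypothetical helper for this
// sketch), sends one request to it and returns what the handler echoed.
func fetchOwnAddr() (string, error) {
	srv := httptest.NewUnstartedServer(http.HandlerFunc(echoRemoteAddr))
	srv.Config.ConnContext = withRemoteAddr
	srv.Start()
	defer srv.Close()

	resp, err := http.Get(srv.URL)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	out, err := fetchOwnAddr()
	if err != nil {
		panic(err)
	}
	fmt.Println(out) // something like: ip=127.0.0.1 port=<ephemeral port>
}
```

Storing the address as a string keeps the handler's type assertion trivial, and the ip and port strings it produces are the same two values the real handler passes to the map lookup.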

The eBPF side

The interesting part of the project resides in the eBPF side: we need to collect the TCP SYN packets that are directed to the server, and store them in an eBPF hash map.

All eBPF programs can be seen as short-lived function callbacks that get executed after a certain event, depending on the program type.

There are several program types. What we need to figure out is which one will give us fast access to the data we need.

Strictly speaking, there are only three possible candidates:

Option 1: kernel probes

Kernel probes allow us to hook into almost any function inside the kernel. To get our TCP SYN, we could hook into one of the core functions that handle TCP connections. There are several detailed guides that describe the part of the networking stack we are interested in.

Regarding TCP specifically, a good function to hook is tcp_conn_request(), which handles TCP SYN packets directed to a listening socket. That gives us access to both the SYN packet and the socket it’s directed to, which is exactly what we need:

SEC("kprobe/tcp_conn_request")

int kprobe_tcp_conn_request(struct pt_regs *ctx)

{

// tcp_conn_request(rsk_ops, af_ops, sk, skb): sk and skb are the 3rd and 4th arguments

struct sock *sk = (struct sock *)PT_REGS_PARM3(ctx); // listening socket

struct sk_buff *skb = (struct sk_buff *)PT_REGS_PARM4(ctx); // SYN packet

// the rest of our program

// ...

return 0;

}

The issue with this approach is that kernel probes are not intended to be used this way. This hook will introduce overhead, and since the function and structs referenced are not part of a stable interface there are no guarantees that they won’t change across kernel versions. We can probably work around both these issues by running our webserver in a dedicated network namespace and by writing our eBPF with CO-RE (Compile Once, Run Everywhere), which helps maintain compatibility across kernel versions. But this is clearly not the right path to follow.

Option 2: tracepoints

Tracepoints are more stable than kernel probes, and more lightweight than packet hooks for the simple reason that, if chosen carefully, they are triggered less frequently. This useful article by Brendan Gregg introduces the TCP tracepoints provided by the Linux kernel.

The problem with this approach is that tracepoints don’t provide the data we need.

We can use bpftrace to list tracepoints and inspect their signatures. The most relevant one is probably sock:inet_sock_set_state, and we can easily see that it does not provide any sk_buff:

sudo bpftrace -lv "tracepoint:sock:inet_sock_set_state"

tracepoint:sock:inet_sock_set_state

const void * skaddr

int oldstate

int newstate

__u16 sport

__u16 dport

__u16 family

__u16 protocol

__u8 saddr[4]

__u8 daddr[4]

__u8 saddr_v6[16]

__u8 daddr_v6[16]

Option 3: XDP

This leaves us with XDP programs, which are one of the intended ways to handle network packets.

SEC("xdp")

int find_tcp_syns(struct xdp_md *ctx) {

// Pointers to packet data

void *data = (void *)(long)ctx->data;

void *data_end = (void *)(long)ctx->data_end;

// The rest of our program

// ...

}

This program will run for every packet received by the network interface we’ll bind it to, which is why it’s important that we return from it as soon as we know that a packet is not relevant.

Most of the code will just be responsible for detecting a TCP SYN directed to our server:

SEC("xdp")

int find_tcp_syns(struct xdp_md *ctx) {

// Pointers to packet data

void *data = (void *)(long)ctx->data;

void *data_end = (void *)(long)ctx->data_end;

// ### ETH layer

struct ethhdr *eth = data;

if ((void *)(eth + 1) > data_end)

return XDP_PASS;

if (bpf_ntohs(eth->h_proto) != ETH_P_IP)

return XDP_PASS;

// ### IP layer

struct iphdr *ip = (struct iphdr *)(eth + 1);

if ((void *)(ip + 1) > data_end)

return XDP_PASS;

// keep all 16 bits: a char would truncate the MF flag (0x2000)

__u16 is_fragment = bpf_ntohs(ip->frag_off) & (IP_MF | IP_OFFSET);

if (is_fragment)

return XDP_PASS;

if (ip->protocol != IPPROTO_TCP)

return XDP_PASS;

int ip_hdr_len = ip->ihl * 4;

if (ip_hdr_len < sizeof(struct iphdr)) {

return XDP_PASS;

}

if ((void *)ip + ip_hdr_len > data_end) {

return XDP_PASS;

}

// check that the destination addr matches the listen addr

// of the golang server. dst_ip is provided at execution

// time by the golang side, already in network byte order.

if (ip->daddr != dst_ip)

return XDP_PASS;

// ### TCP layer

struct tcphdr *tcp = (struct tcphdr *)((unsigned char *)ip + ip_hdr_len);

if ((void *)(tcp + 1) > data_end) {

return XDP_PASS;

}

if (!(tcp->syn) || tcp->ack) // SYN-only (no SYN-ACK)

return XDP_PASS;

// check that the destination port matches the listen port

// of the golang server. dst_port is provided at execution

// time by the golang side, already in network byte order.

if (tcp->dest != dst_port)

return XDP_PASS;

// Success! this is a TCP SYN we care about.

// (storing it in the shared map is omitted here)

return XDP_PASS;

}
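
The same sequence of checks can be mirrored in user space, which is handy for reasoning about the filter without loading anything into the kernel. The following is only a sketch under assumptions: isTargetSYN and buildFrame are hypothetical helpers, not part of the project, and unlike the XDP program this version takes the port in host byte order because it decodes the fields with binary.BigEndian.

```go
package main

import (
	"bytes"
	"encoding/binary"
	"fmt"
)

const (
	ethHdrLen    = 14     // dst MAC + src MAC + EtherType
	ethTypeIPv4  = 0x0800 // ETH_P_IP
	ipProtoTCP   = 6      // IPPROTO_TCP
	ipMinHdrLen  = 20     // IPv4 header without options
	tcpMinHdrLen = 20     // TCP header without options
	ipFragMask   = 0x3FFF // IP_MF | IP_OFFSET
	tcpFlagSYN   = 0x02
	tcpFlagACK   = 0x10
)

// isTargetSYN reports whether frame is an unfragmented IPv4 TCP SYN
// (without ACK) addressed to dstIP:dstPort. dstPort is in host order.
func isTargetSYN(frame []byte, dstIP [4]byte, dstPort uint16) bool {
	// ETH layer
	if len(frame) < ethHdrLen {
		return false
	}
	if binary.BigEndian.Uint16(frame[12:14]) != ethTypeIPv4 {
		return false
	}
	// IP layer
	ip := frame[ethHdrLen:]
	if len(ip) < ipMinHdrLen {
		return false
	}
	if binary.BigEndian.Uint16(ip[6:8])&ipFragMask != 0 {
		return false // fragment
	}
	if ip[9] != ipProtoTCP {
		return false
	}
	ihl := int(ip[0]&0x0F) * 4
	if ihl < ipMinHdrLen || len(ip) < ihl {
		return false
	}
	if !bytes.Equal(ip[16:20], dstIP[:]) {
		return false
	}
	// TCP layer
	tcp := ip[ihl:]
	if len(tcp) < tcpMinHdrLen {
		return false
	}
	flags := tcp[13]
	if flags&tcpFlagSYN == 0 || flags&tcpFlagACK != 0 {
		return false // SYN-only (no SYN-ACK)
	}
	return binary.BigEndian.Uint16(tcp[2:4]) == dstPort
}

// buildFrame crafts a minimal Ethernet+IPv4+TCP frame for the demo.
// Only the fields inspected by isTargetSYN are filled in.
func buildFrame(dstIP [4]byte, dstPort uint16, tcpFlags byte) []byte {
	f := make([]byte, ethHdrLen+ipMinHdrLen+tcpMinHdrLen)
	binary.BigEndian.PutUint16(f[12:14], ethTypeIPv4)
	ip := f[ethHdrLen:]
	ip[0] = 0x45 // version 4, IHL 5 (20 bytes)
	ip[9] = ipProtoTCP
	copy(ip[16:20], dstIP[:])
	tcp := ip[ipMinHdrLen:]
	binary.BigEndian.PutUint16(tcp[2:4], dstPort)
	tcp[12] = 5 << 4 // data offset: 5 words
	tcp[13] = tcpFlags
	return f
}

func main() {
	ip := [4]byte{192, 0, 2, 1}
	fmt.Println(isTargetSYN(buildFrame(ip, 8080, tcpFlagSYN), ip, 8080))            // true
	fmt.Println(isTargetSYN(buildFrame(ip, 8080, tcpFlagSYN|tcpFlagACK), ip, 8080)) // false
	fmt.Println(isTargetSYN(buildFrame(ip, 9090, tcpFlagSYN), ip, 8080))            // false
}
```

Each early return corresponds to one of the XDP_PASS exits above; the length checks play the role of the data_end comparisons that the eBPF verifier requires.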

The shared hashmap

There is only one last connecting element to think about: the implementation of the shared hashmap.

The plan is to store and retrieve TCP SYN packets in the eBPF hashmap using the packet IP + TCP port tuple as a key.

We need to make sure that the key we generate will have the same binary format on both the C and Go sides.

On the C side things are easy:

//ebpf.c

static __always_inline __u64 makeKey(__u32 ip, __u16 port) {

//the end key is a composite of address and port, both in big endian order.

return ((__u64)ip << 16) | port;

}

On the golang side we must be extra careful: network packets store numbers in network byte order, which is big endian.

The IP and port we manage in the eBPF side are read straight from a packet, and are therefore big endian, even though the endianness of the program itself will depend on the host.

The same applies to the golang program, which will have the endianness of the host architecture.

// key.go

func makeKey(ipStr, portStr string) (key uint64, err error) {

ipBytes := net.ParseIP(ipStr).To4()

if ipBytes == nil {

return 0, fmt.Errorf("invalid IPv4 address: %s", ipStr)

}

// note: ipBytes is already in big endian network order,

// therefore we only need to convert its type to uint32.

// func NativeEndian.Uint32 will not cause any byteswap.

ip := binary.NativeEndian.Uint32(ipBytes)

portInt, err := strconv.Atoi(portStr)

if err != nil || portInt < 0 || portInt > 65535 {

return 0, fmt.Errorf("invalid port: %s", portStr)

}

// note: portInt has its bytes in the native endianness of the

// architecture, but we need to explicitly set it in big endian

// network order. This is why we call our hton converter func

port := hostToNet_uint16(uint16(portInt))

//the end key is a composite of address and port, both in big endian order.

key = (uint64(ip) << 16) | uint64(port)

return

}

// hostToNet_uint16 converts a 16-bit integer from host to network byte order, aka "htons"

func hostToNet_uint16(i uint16) uint16 {

b := make([]byte, 2)

binary.NativeEndian.PutUint16(b, i)

return binary.BigEndian.Uint16(b)

}
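
To convince ourselves that both sides agree, we can compare a key built the way the C code would build it with one built from the strings the web server sees. This is a minimal sketch, assuming the eBPF side builds the key from the client address and port exactly as they appear in the packet; keyFromWire and keyFromStrings are hypothetical names for this example, and binary.NativeEndian requires Go 1.21 or later.

```go
package main

import (
	"encoding/binary"
	"fmt"
	"net"
	"strconv"
)

// keyFromWire mimics the C side: the 4 address bytes and the 2 port bytes
// are taken exactly as they appear in the packet and loaded as native
// integers, like reading the header fields in the eBPF program.
func keyFromWire(ipBytes [4]byte, portBytes [2]byte) uint64 {
	ip := binary.NativeEndian.Uint32(ipBytes[:])
	port := binary.NativeEndian.Uint16(portBytes[:])
	return (uint64(ip) << 16) | uint64(port)
}

// keyFromStrings mimics the Go side: it starts from the textual address
// and port that the web server gets from the connection.
func keyFromStrings(ipStr, portStr string) (uint64, error) {
	ipBytes := net.ParseIP(ipStr).To4()
	if ipBytes == nil {
		return 0, fmt.Errorf("invalid IPv4 address: %s", ipStr)
	}
	portInt, err := strconv.ParseUint(portStr, 10, 16)
	if err != nil {
		return 0, fmt.Errorf("invalid port: %s", portStr)
	}
	// Lay the port out in network order, then load it natively.
	// This is the "htons" step.
	var pb [2]byte
	binary.BigEndian.PutUint16(pb[:], uint16(portInt))

	ip := binary.NativeEndian.Uint32(ipBytes)
	port := binary.NativeEndian.Uint16(pb[:])
	return (uint64(ip) << 16) | uint64(port), nil
}

func main() {
	// 192.0.2.10:8080 as it would appear on the wire (8080 = 0x1F90).
	wire := keyFromWire([4]byte{192, 0, 2, 10}, [2]byte{0x1F, 0x90})

	fromStrings, err := keyFromStrings("192.0.2.10", "8080")
	if err != nil {
		panic(err)
	}
	fmt.Println("keys match:", wire == fromStrings) // keys match: true
}
```

The numeric value of the key differs between little endian and big endian hosts, which is fine: the kernel and the Go program run on the same machine, so all that matters is that both sides produce the same bytes.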

End result

I combined the experiments in this article into a webserver that is now open source on GitHub.

The project itself is just a small experiment, and is now complete. But the article you are reading right now is still under development, and will probably improve over time.